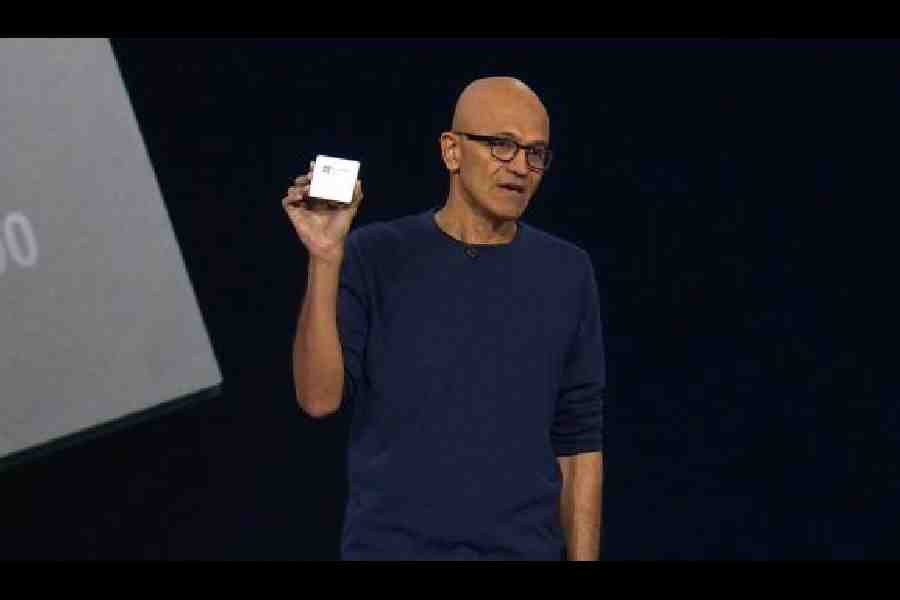

Microsoft, which is putting AI first in all its products, now has custom chips to give it an edge. At Microsoft Ignite, the company’s conference for partner developers, Microsoft announced two custom chips designed at its Redmond, Washington, silicon lab — the Azure Maia 100 AI Accelerator and Azure Cobalt 100 CPU, both arriving in 2024.

The Maia 100, named after a bright blue star, is meant for running cloud AI workloads. “Azure Maia was specifically designed for AI and for achieving the absolute maximum utilisation of the hardware,” said Brian Harry, a Microsoft technical fellow leading the Azure Maia team.

Maia will be used to power all of OpenAI’s workloads. “Since first partnering with Microsoft, we’ve collaborated to co-design Azure’s AI infrastructure at every layer for our models and unprecedented training needs,” said Sam Altman, CEO of OpenAI. “We were excited when Microsoft first shared their designs for the Maia chip, and we’ve worked together to refine and test it with our models. Azure’s end-to-end AI architecture, now optimised down to the silicon with Maia, paves the way for training more capable models and making those models cheaper for our customers.”

With 105 billion transistors, the Maia 100 is “one of the largest chips on 5-nanometer process technology”, the company said.

The Cobalt 100 CPU is an Arm-based chip designed to handle general-purpose workloads. Microsoft has said little about the chip so far, but its Arm-based design is meant to maximise performance per watt, so data centres get more computing power for each unit of energy consumed.

“The architecture and implementation is designed with power efficiency in mind,” said Wes McCullough, corporate vice-president of hardware product development. “We’re making the most efficient use of the transistors on the silicon. Multiply those efficiency gains in servers across all our data centres, it adds up to a pretty big number.”

The company has said Cobalt 100 is a 64-bit processor with 128 computing cores on die, and that it can achieve a 40 per cent reduction in power consumption compared to the other Arm-based chips Azure has been using.

The other two big cloud vendors, Google and Amazon, already have custom chips. Google has its custom Tensor Processing Unit, or TPU, and Amazon has Graviton, Trainium and Inferentia.

Special AI chips from cloud providers will help meet demand when there’s a GPU shortage, but Microsoft, unlike Nvidia or AMD, has no plans to let companies buy servers containing its chips.